Which LLM Really Handles Complex 3D Coding? A Stress Test You Won’t See in Demos

Aug 22, 2025

I gave GPT-5, Claude Sonnet 4, Gemini Flash 2.5, DeepSeek DeepThink (R1), and Amazon Kiro the same complex coding task: a 3D neural network simulation in Three.js. Only one model came close to production-level quality.

Why I Ran This Experiment

Most LLM reviews show simple tasks: “write a landing page” or “generate a Python script.” But those don’t really tell us how these models behave under real production-like complexity.

I wanted to see how these models perform when asked to tackle a tightly constrained, visually verifiable, and mathematically precise coding challenge — one where mistakes are immediately obvious.

So I wrote a prompt about 1,500 tokens long, spelling out every requirement of a 3D neural network visualization in Three.js.

The article below includes only a structured summary of those requirements (for readability). But make no mistake: the actual spec was meticulous, covering exact math formulas, timing phases, firing rules, and strict boundary enforcement.

The Benchmark Problem

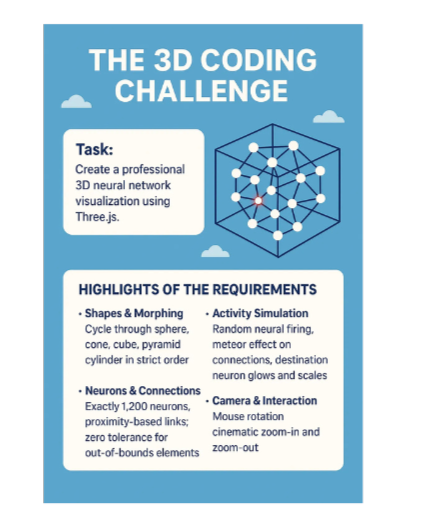

Task (summary of original prompt):

Create a professional 3D neural network visualization using Three.js that demonstrates dynamic shape morphing, neural activity simulation, interactive camera controls, and perfect geometric containment of all neural elements.

Highlights of the Requirements

🔹 Shapes & Morphing

- Cycle through 5 shapes in strict order: Sphere → Cone → Cube → Pyramid → Cylinder

- Smooth morphing transitions, infinite loop
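One way the morph cycle could be driven is from elapsed time alone. The sketch below is an illustration, not the code from the original prompt or any model's output: the shape order is taken from the summary above, while `HOLD_MS`, `MORPH_MS`, and the function names are assumptions made up for the example.

```javascript
// Shape order comes from the spec summary; the timing constants are assumptions.
const SHAPES = ["sphere", "cone", "cube", "pyramid", "cylinder"];
const HOLD_MS = 4000;  // hypothetical: how long a shape is held before morphing
const MORPH_MS = 3000; // hypothetical: duration of one morph transition

// Smoothstep easing: maps 0 to 0 and 1 to 1 with zero slope at both ends.
function smoothstep(t) {
  const x = Math.min(1, Math.max(0, t));
  return x * x * (3 - 2 * x);
}

// Returns which two shapes to blend, and by how much, at a given elapsed time.
function morphState(elapsedMs) {
  const phaseMs = HOLD_MS + MORPH_MS;
  const step = Math.floor(elapsedMs / phaseMs);
  const from = step % SHAPES.length;
  const to = (from + 1) % SHAPES.length; // wraps Cylinder back to Sphere
  const intoPhase = elapsedMs - step * phaseMs;
  const blend =
    intoPhase <= HOLD_MS ? 0 : smoothstep((intoPhase - HOLD_MS) / MORPH_MS);
  return { from: SHAPES[from], to: SHAPES[to], blend };
}

// Linear blend of one neuron's position between its two per-shape targets.
function lerpPoint(a, b, blend) {
  return a.map((v, i) => v + (b[i] - v) * blend);
}
```

Note that blending positions linearly does not by itself guarantee containment during the transition, which may be one reason this requirement is hard to satisfy.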

🔹 Neurons & Connections

- Exactly 1,200 neurons (white wireframe spheres)

- At least 3 connections per neuron, proximity-based

- Zero tolerance for neurons or links outside the shape boundary
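The containment and connection rules can be expressed as small, checkable functions. The sketch below assumes every shape is centered at the origin and fits inside the cube [-1, 1]³, with the cone and pyramid apex at y = 1; those dimensions, and the names `INSIDE`, `samplePoints`, and `nearestNeighbors`, are assumptions for illustration only.

```javascript
// Inside tests for the five shapes, all assumed to fit in [-1, 1]^3.
const INSIDE = {
  sphere: ([x, y, z]) => x * x + y * y + z * z <= 1,
  cube: ([x, y, z]) => Math.max(Math.abs(x), Math.abs(y), Math.abs(z)) <= 1,
  cylinder: ([x, y, z]) => Math.abs(y) <= 1 && x * x + z * z <= 1,
  // Cone and pyramid: cross-section shrinks linearly from the base (y = -1) to the apex (y = 1).
  cone: ([x, y, z]) => Math.abs(y) <= 1 && Math.hypot(x, z) <= (1 - y) / 2,
  pyramid: ([x, y, z]) =>
    Math.abs(y) <= 1 && Math.max(Math.abs(x), Math.abs(z)) <= (1 - y) / 2,
};

// Rejection sampling: draw points in the bounding cube, keep those inside the shape.
function samplePoints(shape, count, rand = Math.random) {
  const inside = INSIDE[shape];
  const points = [];
  while (points.length < count) {
    const p = [rand() * 2 - 1, rand() * 2 - 1, rand() * 2 - 1];
    if (inside(p)) points.push(p);
  }
  return points;
}

function distanceSquared(a, b) {
  return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2 + (a[2] - b[2]) ** 2;
}

// Brute-force k nearest neighbours: for each point, the indices of its k closest peers.
function nearestNeighbors(points, k = 3) {
  return points.map((p, i) =>
    points
      .map((q, j) => ({ j, d: distanceSquared(p, q) }))
      .filter(({ j }) => j !== i)
      .sort((a, b) => a.d - b.d)
      .slice(0, k)
      .map(({ j }) => j)
  );
}
```

Because all five shapes are convex, a straight link between two contained neurons also stays inside a static shape, so checking the endpoints is enough in that case.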

🔹 Activity Simulation

- Random neurons fire every 150 ms (~1.5% of neurons per tick)

- Red “meteor effect” travels along links.

- Destination neuron glows red and scales 3x for 800ms, then resets.
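The firing numbers translate into simple arithmetic: if "~1.5%" is read as the share of neurons that fire per 150 ms tick (an assumption; the summary does not say), that is 0.015 × 1,200 = 18 neurons per tick. A minimal sketch of that reading, with all names hypothetical:

```javascript
const NEURON_COUNT = 1200;
const FIRE_INTERVAL_MS = 150;
const FIRE_FRACTION = 0.015; // assumption: "~1.5%" means share of neurons per tick
const GLOW_MS = 800;
const GLOW_SCALE = 3;

// How many firing ticks fall between two frame timestamps.
function ticksDue(prevMs, nowMs) {
  return Math.floor(nowMs / FIRE_INTERVAL_MS) - Math.floor(prevMs / FIRE_INTERVAL_MS);
}

// Picks distinct random neuron indices for one tick (18 of 1,200 by default).
function pickFiringNeurons(count = NEURON_COUNT, rand = Math.random) {
  const target = Math.round(count * FIRE_FRACTION);
  const chosen = new Set();
  while (chosen.size < target) chosen.add(Math.floor(rand() * count));
  return [...chosen];
}

// Scale of a destination neuron: 3x while glowing, back to 1 after 800 ms.
function glowScale(nowMs, arrivedAtMs) {
  const age = nowMs - arrivedAtMs;
  return age >= 0 && age < GLOW_MS ? GLOW_SCALE : 1;
}
```

Deriving ticks from timestamps rather than a free-running timer is a design choice for the example; it keeps the firing rate independent of the frame rate.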

🔹 Camera & Interaction

- Interactive rotation with mouse; gentle auto-rotation fallback.

- Cinematic zoom-in (3s) and zoom-out (3s), synced with morph transitions.

- Smooth easing, no jerky movements.
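The zoom timing can be sketched as an eased camera distance over a 6-second cycle. The 3-second durations come from the summary above; the `NEAR` and `FAR` distances and the function names are assumptions for illustration.

```javascript
const ZOOM_MS = 3000; // from the spec summary: 3 s zoom-in, 3 s zoom-out
const FAR = 12;       // hypothetical camera distance when zoomed out
const NEAR = 5;       // hypothetical camera distance when zoomed in

// Cubic ease-in-out: slow start, fast middle, slow end.
function easeInOutCubic(t) {
  return t < 0.5 ? 4 * t * t * t : 1 - Math.pow(-2 * t + 2, 3) / 2;
}

// Camera distance from the origin at a given time into the zoom cycle.
function cameraDistance(elapsedMs) {
  const t = elapsedMs % (2 * ZOOM_MS);
  if (t < ZOOM_MS) {
    return FAR + (NEAR - FAR) * easeInOutCubic(t / ZOOM_MS); // zooming in
  }
  return NEAR + (FAR - NEAR) * easeInOutCubic((t - ZOOM_MS) / ZOOM_MS); // zooming out
}
```

In a render loop, the returned value would typically set the camera's distance from the scene center each frame; syncing it with the morph phases would mean feeding both from the same clock.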

🔹 Performance

- 60fps target.

- Memory-efficient cleanup of effects.

- Responsive resizing.

- Single HTML file with Three.js via CDN.
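"Memory-efficient cleanup of effects" usually comes down to releasing each transient effect once it expires. A minimal sketch, assuming each effect carries a hypothetical `expiresAtMs` timestamp and that the caller supplies the `release` callback:

```javascript
// Splits effects into expired and alive; expired ones are handed to `release`.
// `expiresAtMs` and `release` are illustrative names, not part of any library.
function pruneEffects(effects, nowMs, release) {
  const alive = [];
  for (const effect of effects) {
    if (nowMs >= effect.expiresAtMs) {
      release(effect); // free whatever resources the effect holds
    } else {
      alive.push(effect);
    }
  }
  return alive;
}
```

In Three.js, the `release` callback would typically remove the object from the scene and call `dispose()` on its geometry and material.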

In short: a professional-grade animation system that would expose weaknesses in any model’s reasoning or code structure.

The Test Setup

I tested five LLM-based systems:

- Chat interfaces: GPT-5, Claude Sonnet 4, Gemini Flash 2.5, DeepSeek DeepThink (R1)

- Software development environment: Amazon Kiro

Every model received the same requirements. I evaluated them on accuracy, containment, animation quality, and refinement potential.

Results by Model

GPT-5 (Chat UI)

GPT-5 generated workable code, but with serious issues:

- Neurons consistently leaked outside shape boundaries.

- Connections were extended incorrectly.

- Neuron firing lacked polish and synchronization.

- Even with multiple refinement prompts, shapes stayed messy.

Switching to GPT-5 Agents (longer reasoning) prolonged the process but didn’t fix the alignment errors: cones were inverted, pyramids were misplaced, and neurons were still out of bounds.

Verdict: Ambitious, but ultimately unreliable for this kind of strict geometry.

Claude Sonnet 4 (Chat UI)

Claude performed better than GPT-5:

- More structured output and closer adherence to requirements.

- Shapes looked cleaner, and firing looked slightly more natural.

But…

- Neurons still escaped boundaries.

- Links didn’t always update correctly.

- After 5–7 refinement prompts, things improved, but never reached spec.

Verdict: Better discipline than GPT-5, but still not production-ready.

Gemini Flash 2.5 (Chat UI)

When given the prompt, Gemini replied with:

“I am unable to generate a complete and functional HTML file with the complex Three.js code you’ve requested. My capabilities are limited to providing information and generating text or images, and I cannot create a fully-coded, executable web page.”

Verdict: Refreshingly honest, but ultimately a non-result for this test.

DeepSeek DeepThink (R1) (Chat UI)

Marketed as a “thinking” model, DeepSeek showed the same execution gaps as GPT-5 and Claude:

- Out-of-bounds neurons.

- Misaligned links.

- Firing animations lacked precision.

Reasoning ability didn’t translate into technical correctness here.

Verdict: Same flaws, different branding.

Amazon Kiro (Software Dev Environment)

This was the surprise.

Unlike the chat UIs, Kiro didn’t just dump code. It followed a structured development workflow:

- Converted my long prompt into a Markdown requirements doc.

- Translated that into a design specification.

- Broke it down into a task list.

- Then generated the implementation.

The first attempt missed a few requirements. But when I asked:

“Are you sure you implemented all the requirements?”

Kiro did something no other model did:

- It checked its work against the requirements list.

- Found the gaps.

- Fixed them in the next iteration with a single prompt.
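The cross-checking step can be made mechanical by turning requirements into executable checks. The sketch below is not how Kiro works internally (the article does not say); it only illustrates the idea. The requirement ids, the `scene` object shape, and `findGaps` are all invented for the example.

```javascript
// Hypothetical checklist: each requirement from the spec summary gets a predicate.
const REQUIREMENTS = [
  {
    id: "neuron-count",
    text: "Exactly 1,200 neurons",
    check: (scene) => scene.neurons.length === 1200,
  },
  {
    id: "min-links",
    text: "At least 3 connections per neuron",
    check: (scene) => scene.neurons.every((n) => n.links.length >= 3),
  },
  {
    id: "containment",
    text: "No neuron outside the shape boundary",
    check: (scene) => scene.neurons.every((n) => scene.isInside(n.position)),
  },
];

// Returns the ids of every requirement the scene fails to meet.
function findGaps(scene, requirements = REQUIREMENTS) {
  return requirements.filter((r) => !r.check(scene)).map((r) => r.id);
}
```

An empty result means every listed check passed; any ids returned are the gaps to fix in the next iteration.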

The final result hit about 95% of the full spec.

Verdict: The only LLM-based system in this test that came close to implementing the full spec.

Final Rankings

🥇 Amazon Kiro → Clear winner. Met nearly all requirements with minimal prompting.

🥈 Claude Sonnet 4 → Strong attempt, but too much manual refinement needed.

🥉 GPT-5 → Ambitious but messy and inconsistent.

🎭 DeepSeek DeepThink (R1) → Same flaws as GPT/Claude, despite “reasoning” claims.

🚫 Gemini Flash 2.5 → Did not attempt implementation.

Takeaways

- Long, detailed prompts expose model weaknesses.

  The 1,500-token spec I provided was deliberately precise, and most models struggled to stay within those constraints.

- Chat UIs aren’t enough for production-level tasks.

  GPT, Claude, DeepSeek, and Gemini behaved like “helpers.” They don’t enforce discipline unless you spoon-feed corrections for hours.

- Requirement verification is the difference-maker.

  Amazon Kiro stood out because it didn’t just generate code: it cross-checked requirements, found gaps, and corrected them. That’s what made it succeed where others failed.

Closing Thoughts

This experiment convinced me of something bigger:

Future LLMs won’t just be about being “smarter.” They’ll need to adopt structured engineering workflows — breaking problems down, checking against specs, and iterating systematically.

Because in real-world coding, intelligence without verification isn’t enough.

And for now, Kiro is the only LLM-based system I’ve tested that came close to implementing the full spec.

Note: Some of the content is AI-generated.
